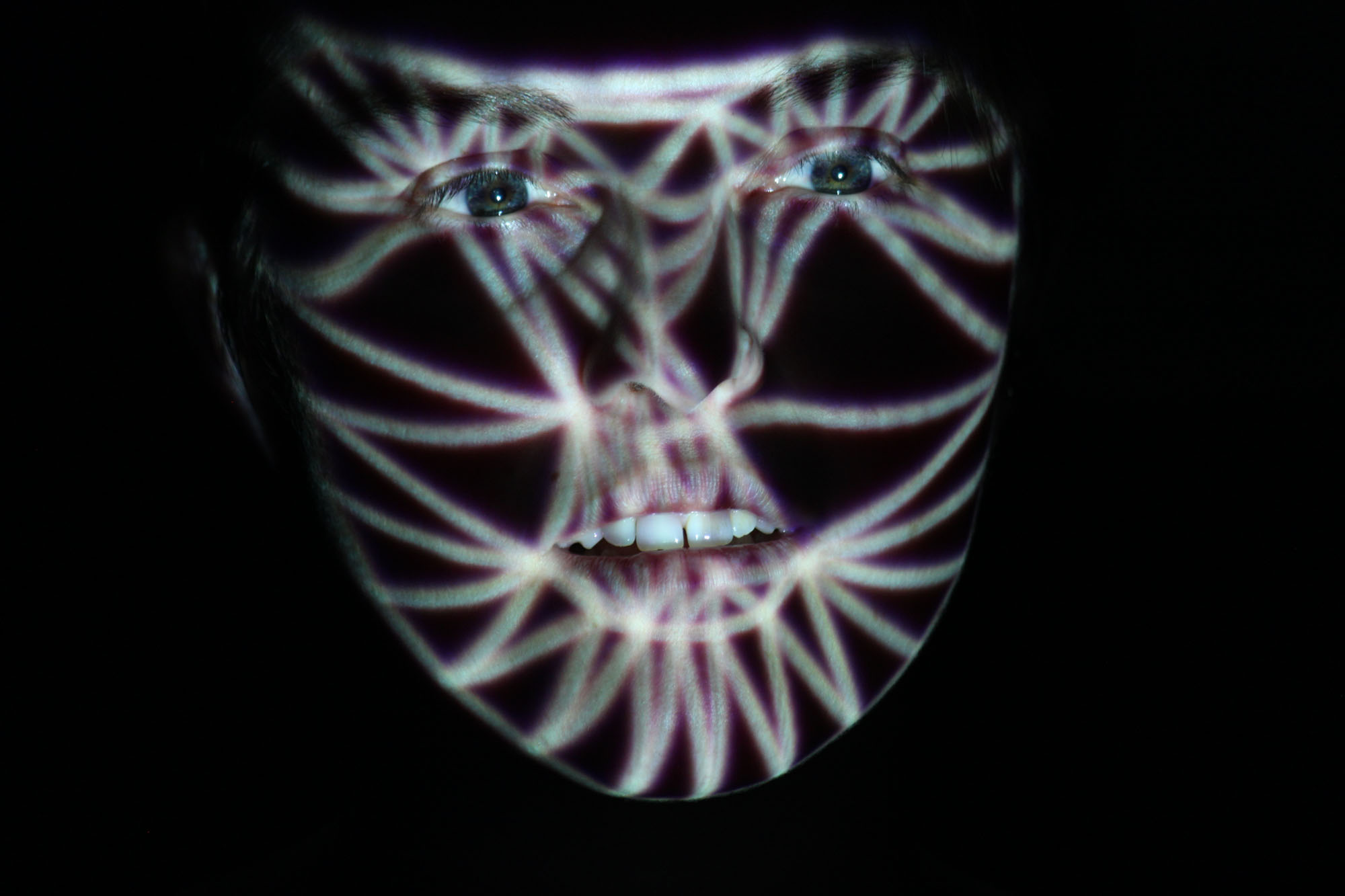

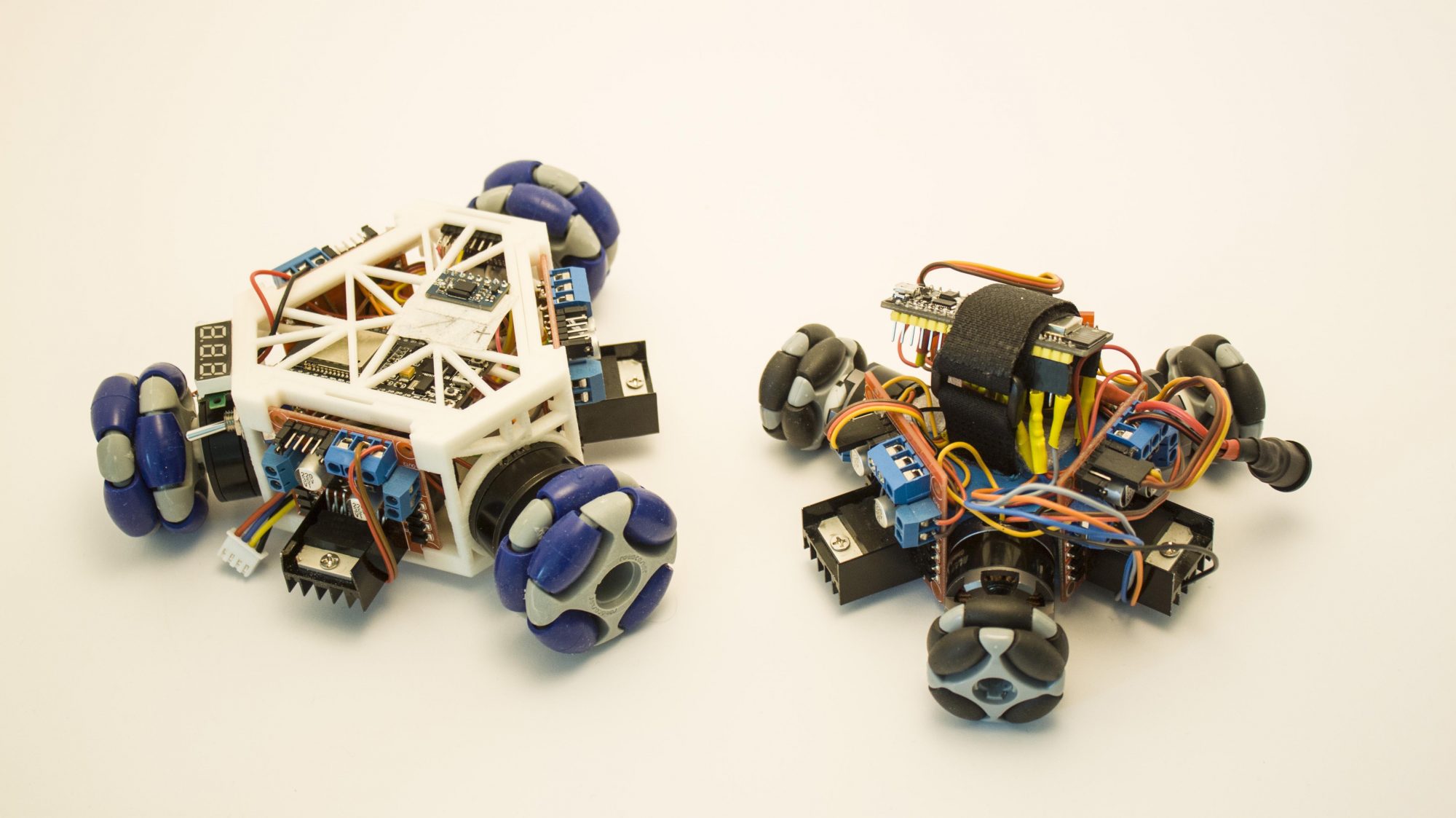

We have just taken the next step in the development of our Empathy Swarm project: we set up a working toolchain for producing the next robot prototype!

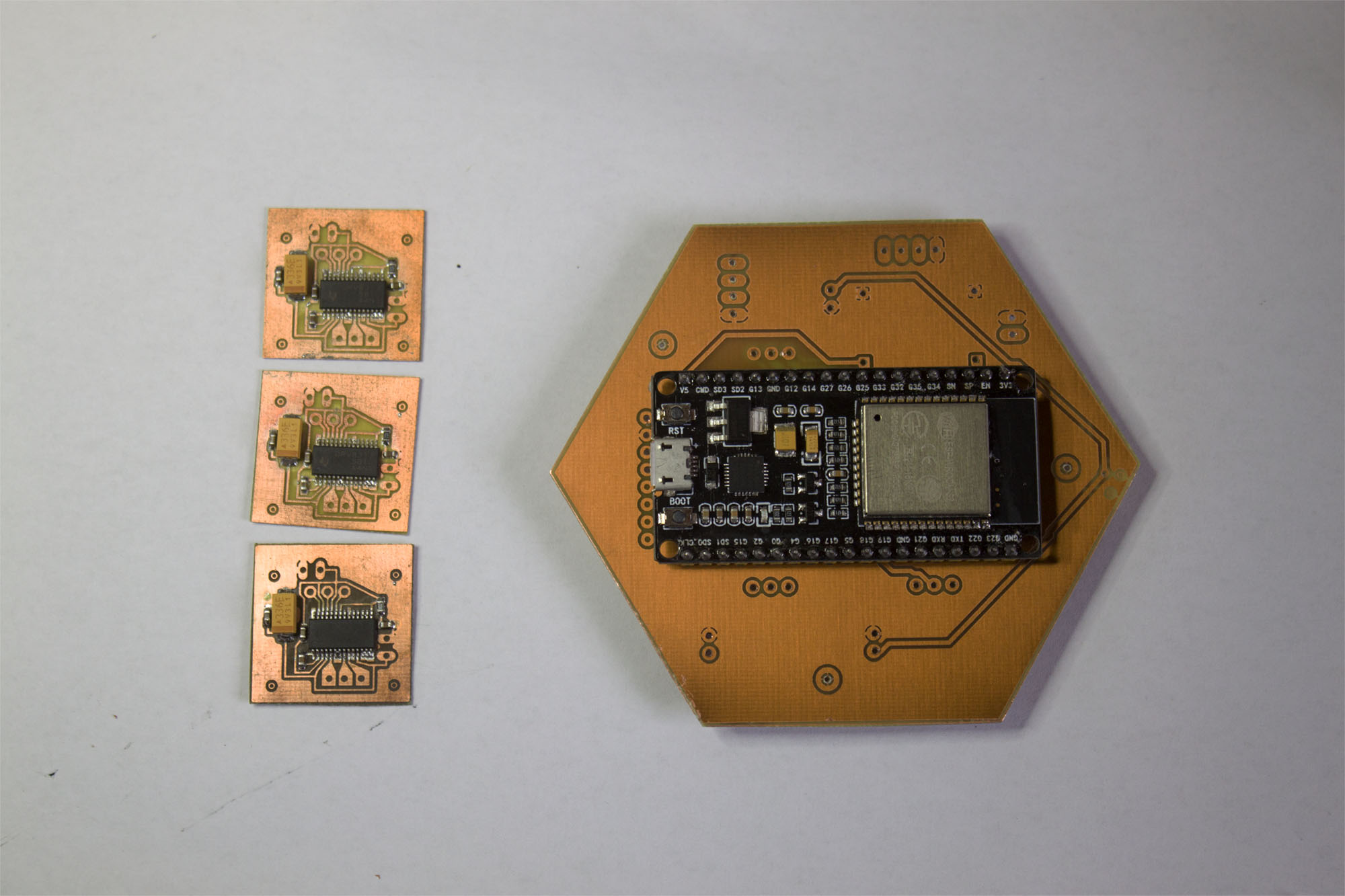

Our previous prototypes, Robert and Robertina, had a lot of cables. We have now dramatically reduced the number of cables by designing and producing custom-made PCBs (Printed Circuit Boards). This not only makes the robot more robust but also reduces the production time and complexity of assembling each individual robot. Now imagine producing 100 of them!

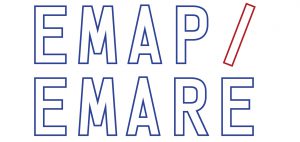

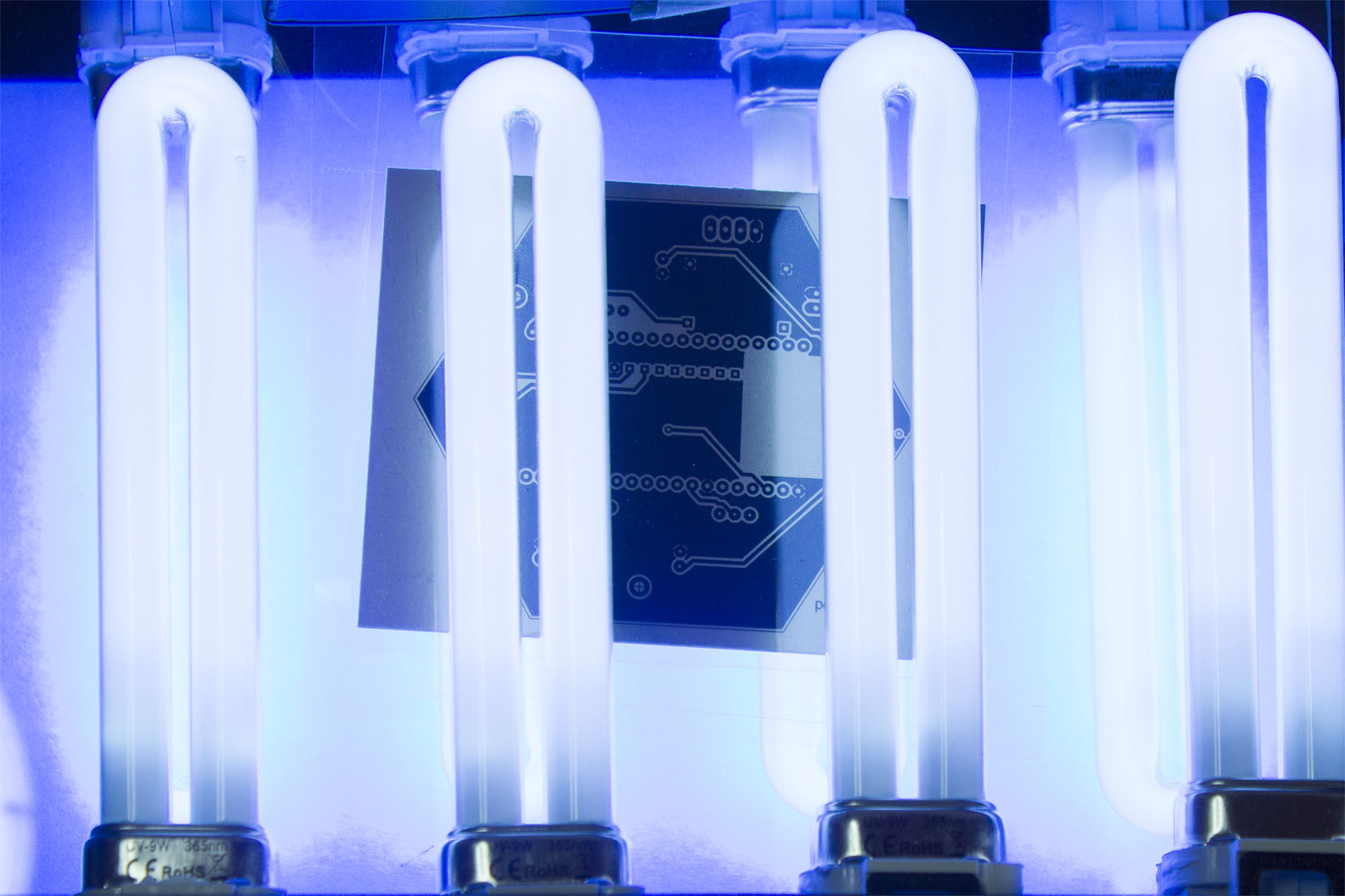

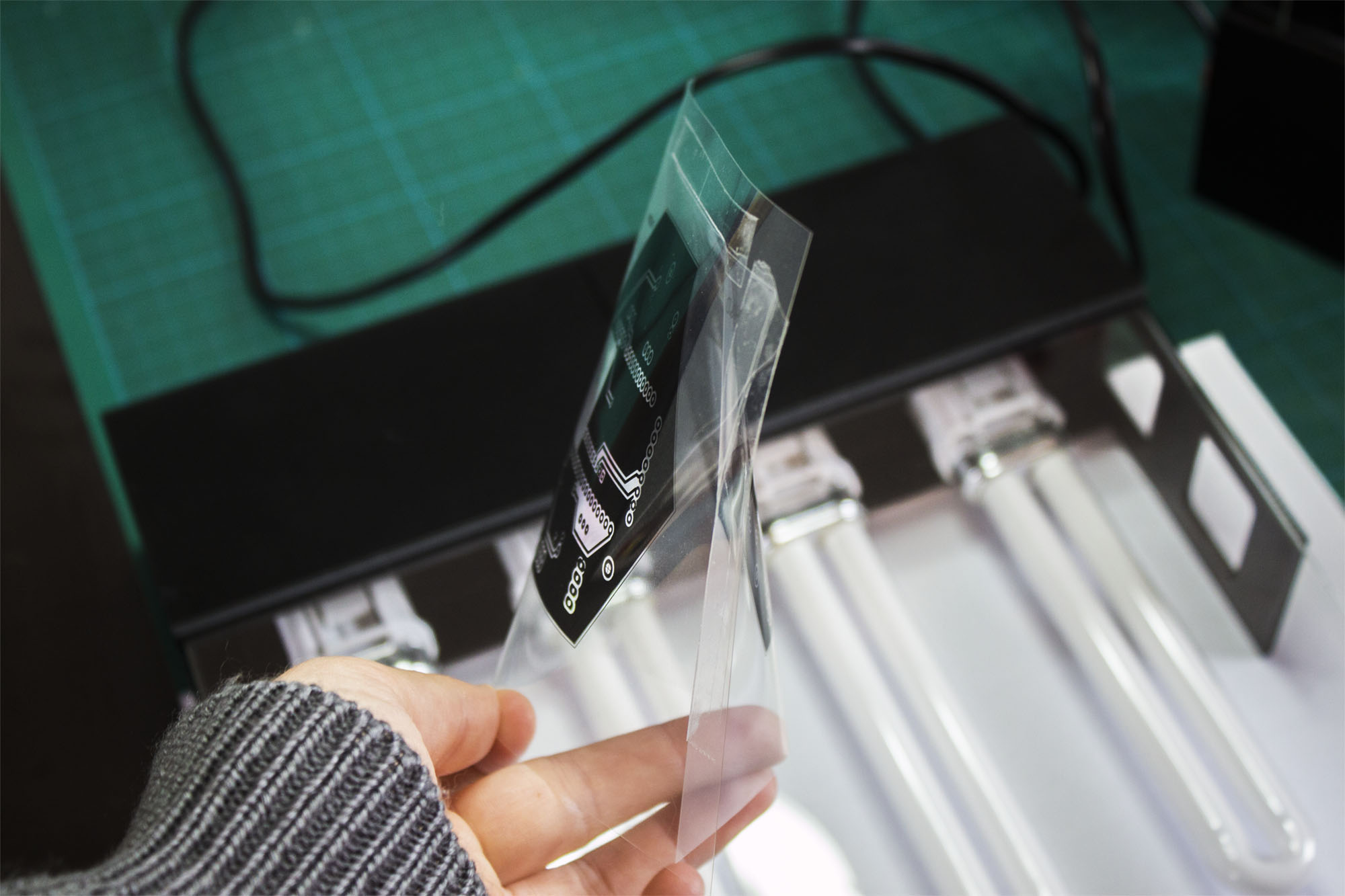

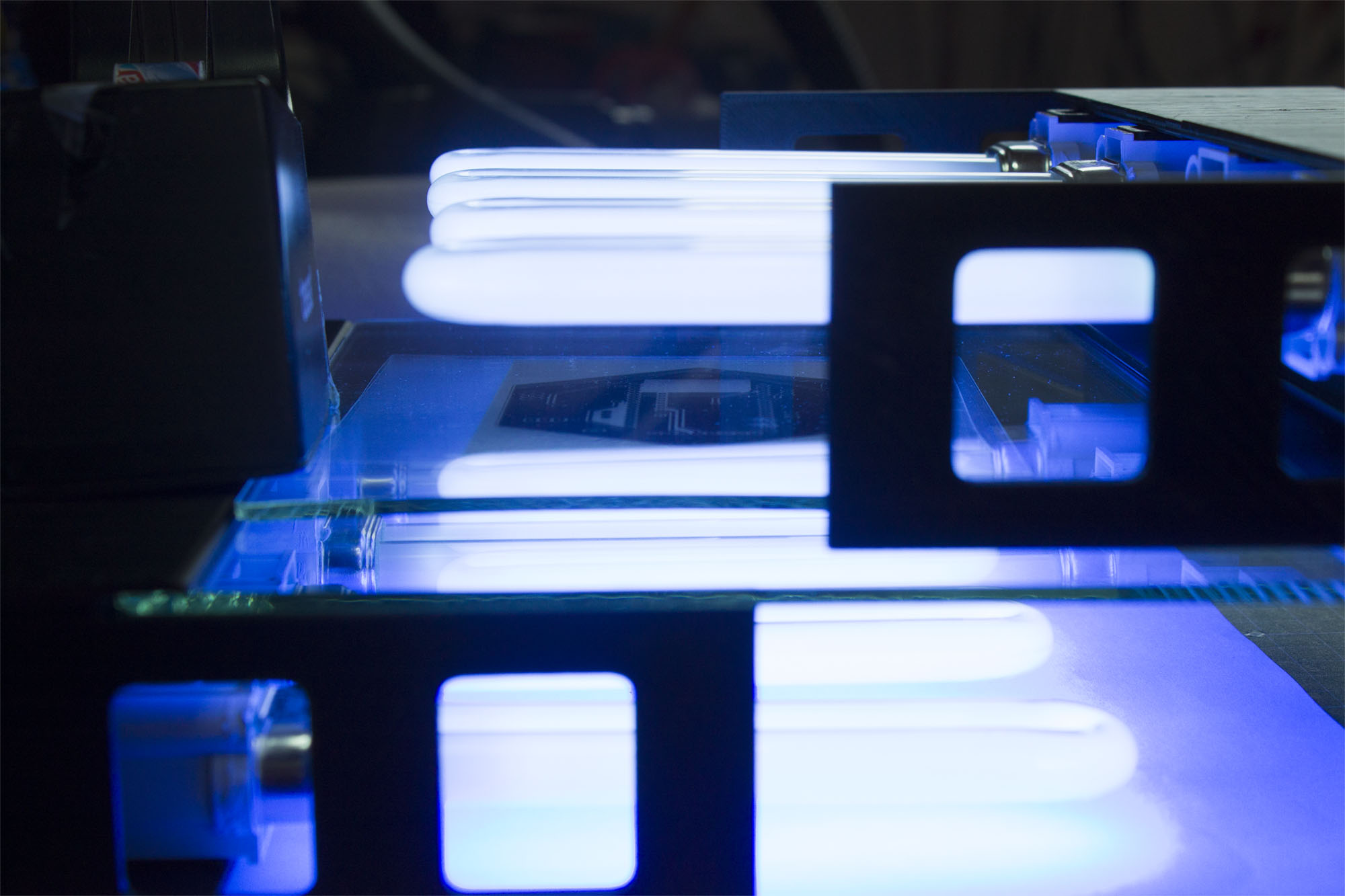

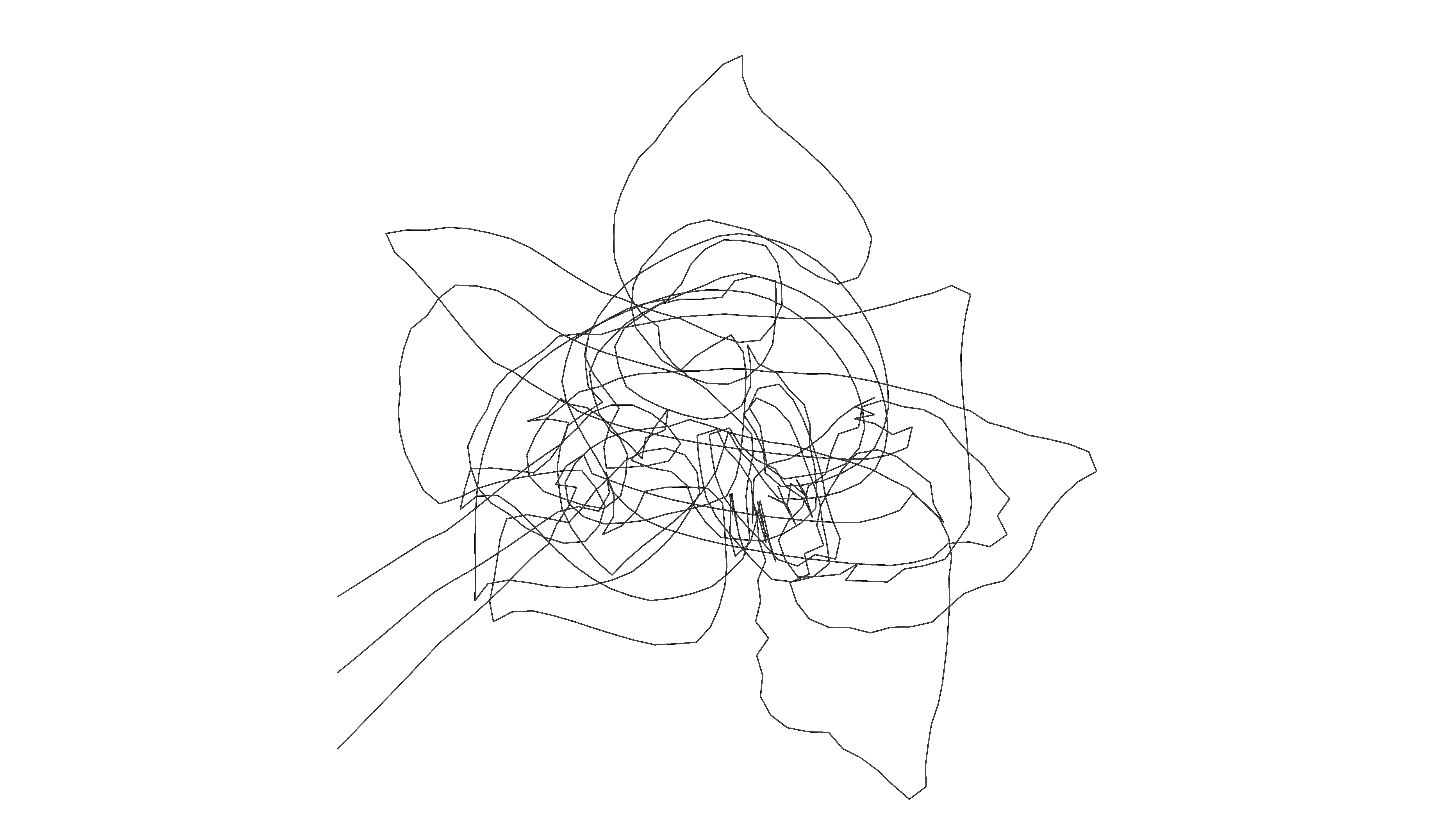

The circuit is designed on the computer in the form of a black-and-white image in which all connections (the copper traces) are black and the gaps between them are white. This image is printed with a laser printer onto a transparency foil. The foil is then used together with a pre-sensitized PCB coated with positive photoresist, which initially looks like a blank copper plate. To transfer the circuit design onto this board, the transparency foil is fixed to it and both are put into the UV exposure box, so that all the empty ‘white’ areas are exposed while the black toner blocks the UV light.
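To make the black-and-white convention concrete, here is a toy model in Python. It assumes the artwork is a small grayscale grid (0 = black, 255 = white) and that any pixel darker than a threshold counts as toner; the function names and the threshold of 128 are hypothetical and not part of our toolchain. With a positive photoresist, only the white (exposed) areas lose their resist and, later, their copper, so the copper that survives is exactly the black part of the artwork.

```python
# Toy model of exposing a positive-photoresist board through a printed foil.
# Assumptions (hypothetical): artwork is a grayscale grid, 0 = black, 255 = white;
# names and the threshold are illustrative, not taken from our toolchain.

Grid = list[list[int]]

def to_mask(gray: Grid, threshold: int = 128) -> Grid:
    """Binarize the artwork: 1 = black (toner, copper to keep), 0 = white."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]

def exposed_areas(mask: Grid) -> Grid:
    """UV light reaches the photoresist only where the foil has no toner."""
    return [[1 - m for m in row] for row in mask]

def remaining_copper(mask: Grid) -> Grid:
    """Positive resist: exposed resist is removed and the copper below is etched,
    so copper survives only under the unexposed (black) areas."""
    exposed = exposed_areas(mask)
    return [[1 - e for e in row] for row in exposed]

artwork = [
    [255, 255, 255, 255],
    [0, 0, 0, 255],      # a horizontal trace
    [255, 255, 40, 255], # dark-gray pixel still counts as toner
]
mask = to_mask(artwork)
print(remaining_copper(mask))  # [[0, 0, 0, 0], [1, 1, 1, 0], [0, 0, 1, 0]]
```

In this model, binarizing first mirrors why the design is drawn in pure black and white: a gray pixel has no clear "copper" or "bare" meaning.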

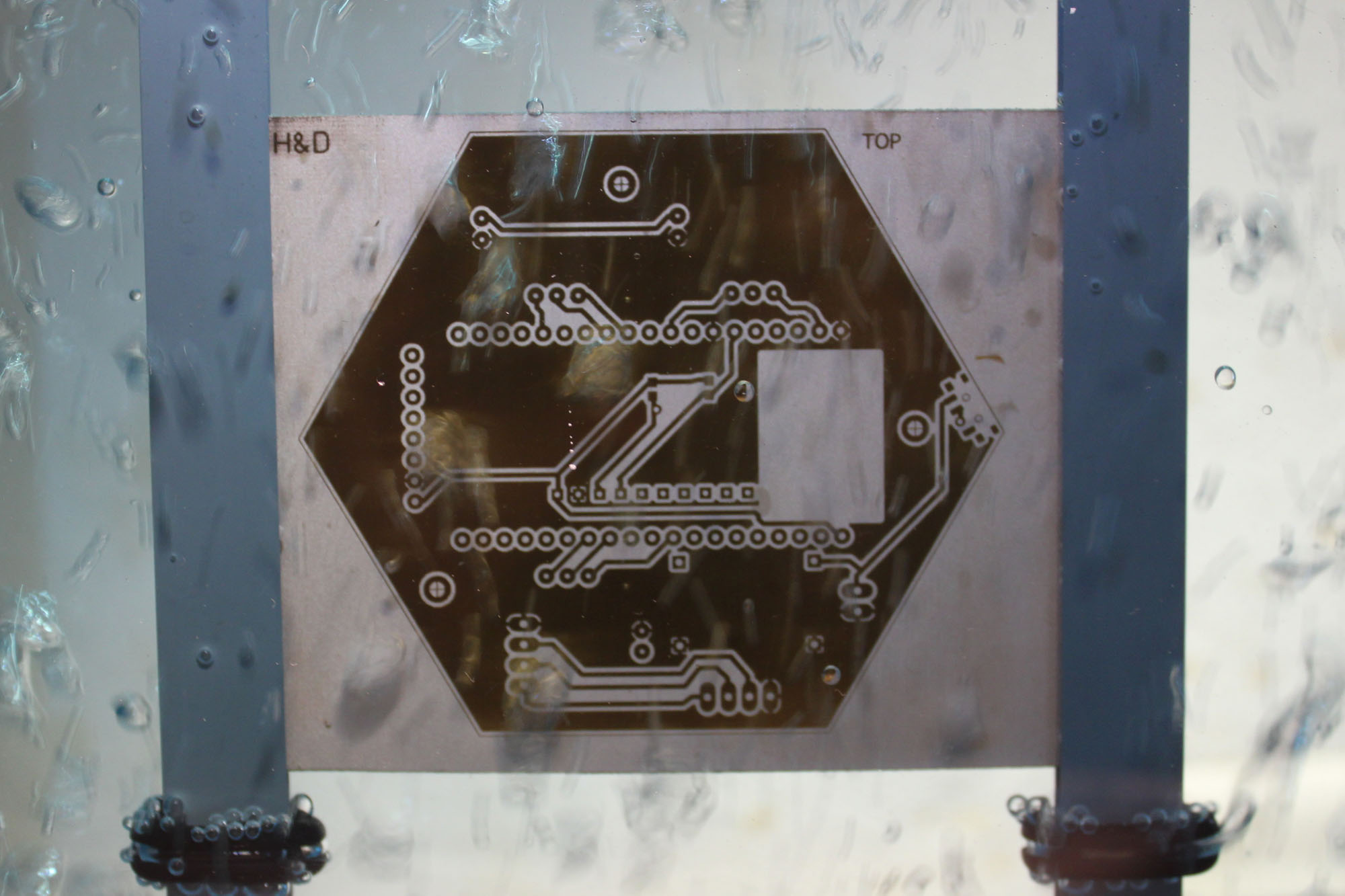

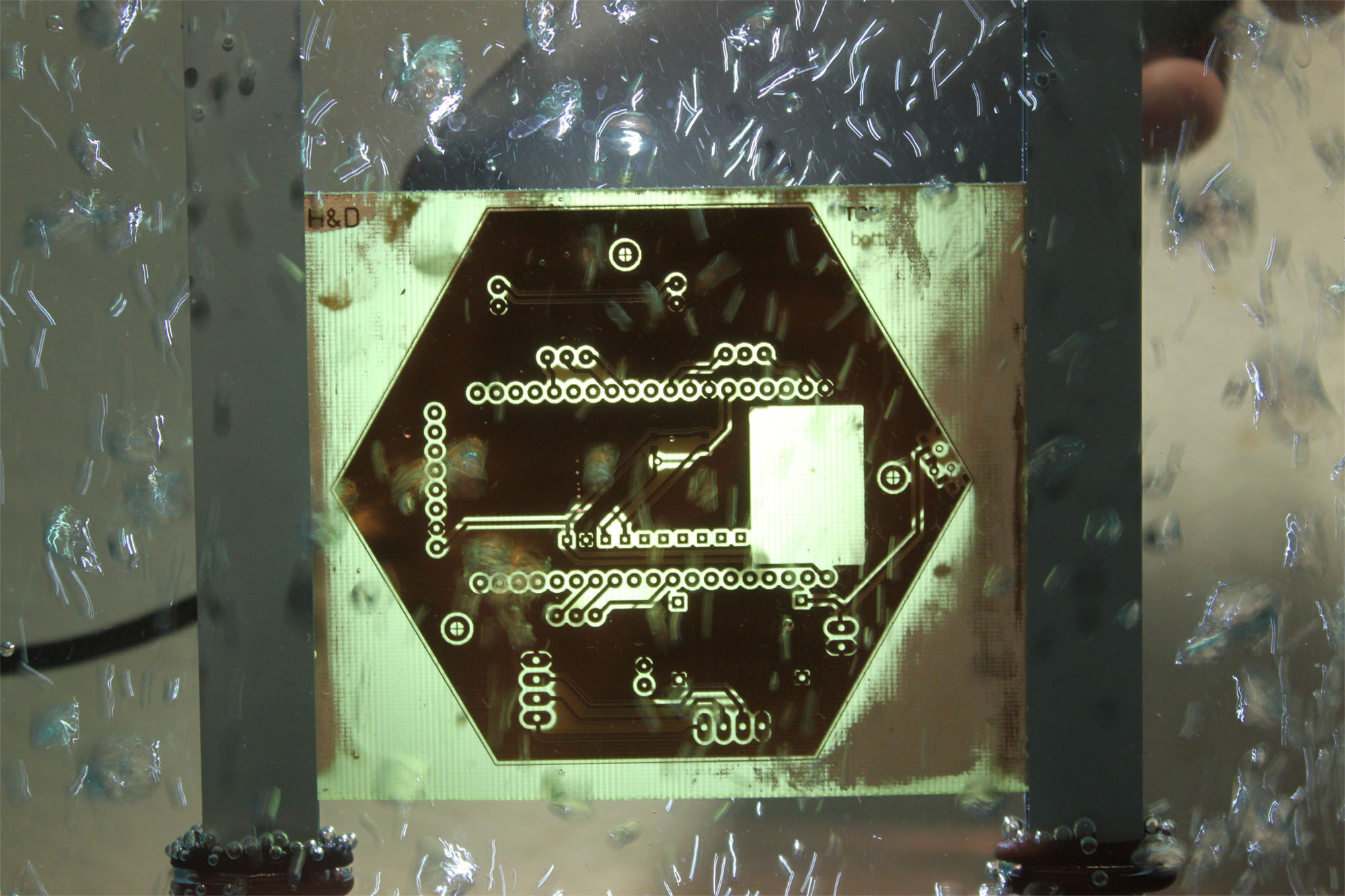

In our case, we designed a double-sided PCB, which carries circuits on both sides that are connected through pins. Both sides must be perfectly aligned during exposure. This can be achieved by taping the two transparency foils together with transparent tape into a kind of pouch that holds the board in between.
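Taping the foils into a pouch fixes their alignment, but it can help to check it before exposure. The sketch below assumes (our addition, not something described above) that both layer artworks carry identical registration marks whose positions can be measured, for example under a magnifier, in one shared coordinate frame seen from the top side; the function names and the 0.2 mm tolerance are hypothetical.

```python
from math import hypot

# Hypothetical helper: compare registration marks on the top and bottom foils.
# Assumption: both artworks carry the same marks (e.g. small corner crosses),
# measured in mm in one shared frame, as seen from the top side of the board.
Point = tuple[float, float]

def misalignment(top: list[Point], bottom: list[Point]) -> tuple[Point, float]:
    """Return the mean (dx, dy) shift of the bottom foil and the worst single-mark error."""
    if not top or len(top) != len(bottom):
        raise ValueError("both foils need the same, non-zero number of marks")
    pairs = list(zip(top, bottom))
    dx = sum(b[0] - t[0] for t, b in pairs) / len(pairs)
    dy = sum(b[1] - t[1] for t, b in pairs) / len(pairs)
    worst = max(hypot(b[0] - t[0], b[1] - t[1]) for t, b in pairs)
    return (dx, dy), worst

def is_aligned(top: list[Point], bottom: list[Point], tolerance_mm: float = 0.2) -> bool:
    """True if every mark pair lies within the (assumed) tolerance."""
    _, worst = misalignment(top, bottom)
    return worst <= tolerance_mm

top_marks = [(0.0, 0.0), (50.0, 0.0), (50.0, 30.0), (0.0, 30.0)]
bottom_marks = [(0.1, 0.0), (50.1, 0.0), (50.1, 30.0), (0.1, 30.0)]
shift, worst = misalignment(top_marks, bottom_marks)
print(f"mean shift = ({shift[0]:.2f}, {shift[1]:.2f}) mm, worst = {worst:.2f} mm")
print("aligned:", is_aligned(top_marks, bottom_marks))
```

Reporting both the mean shift and the worst mark separates a simple offset (fixable by sliding one foil) from a rotation or scaling error, which shows up as uneven per-mark errors.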

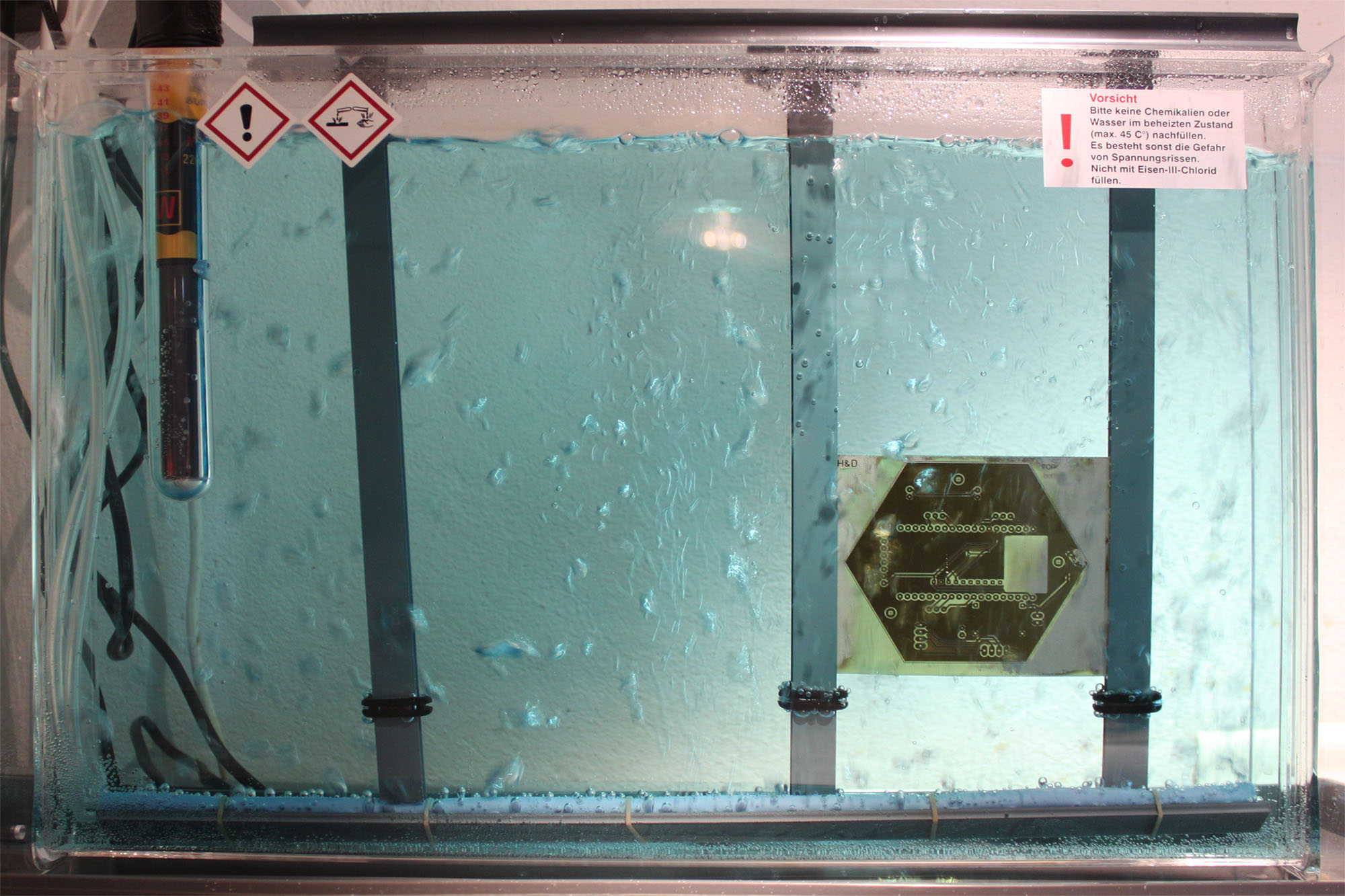

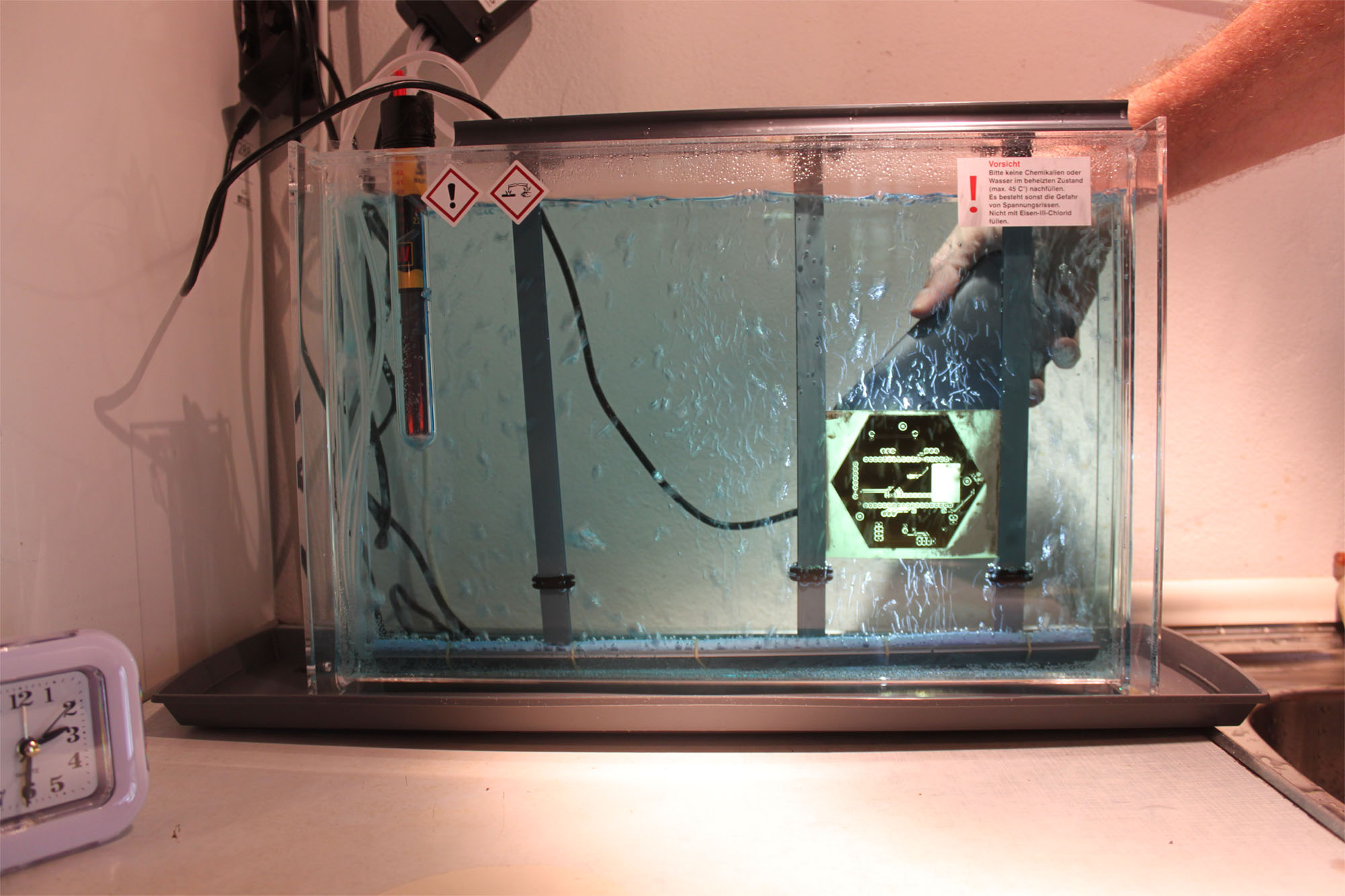

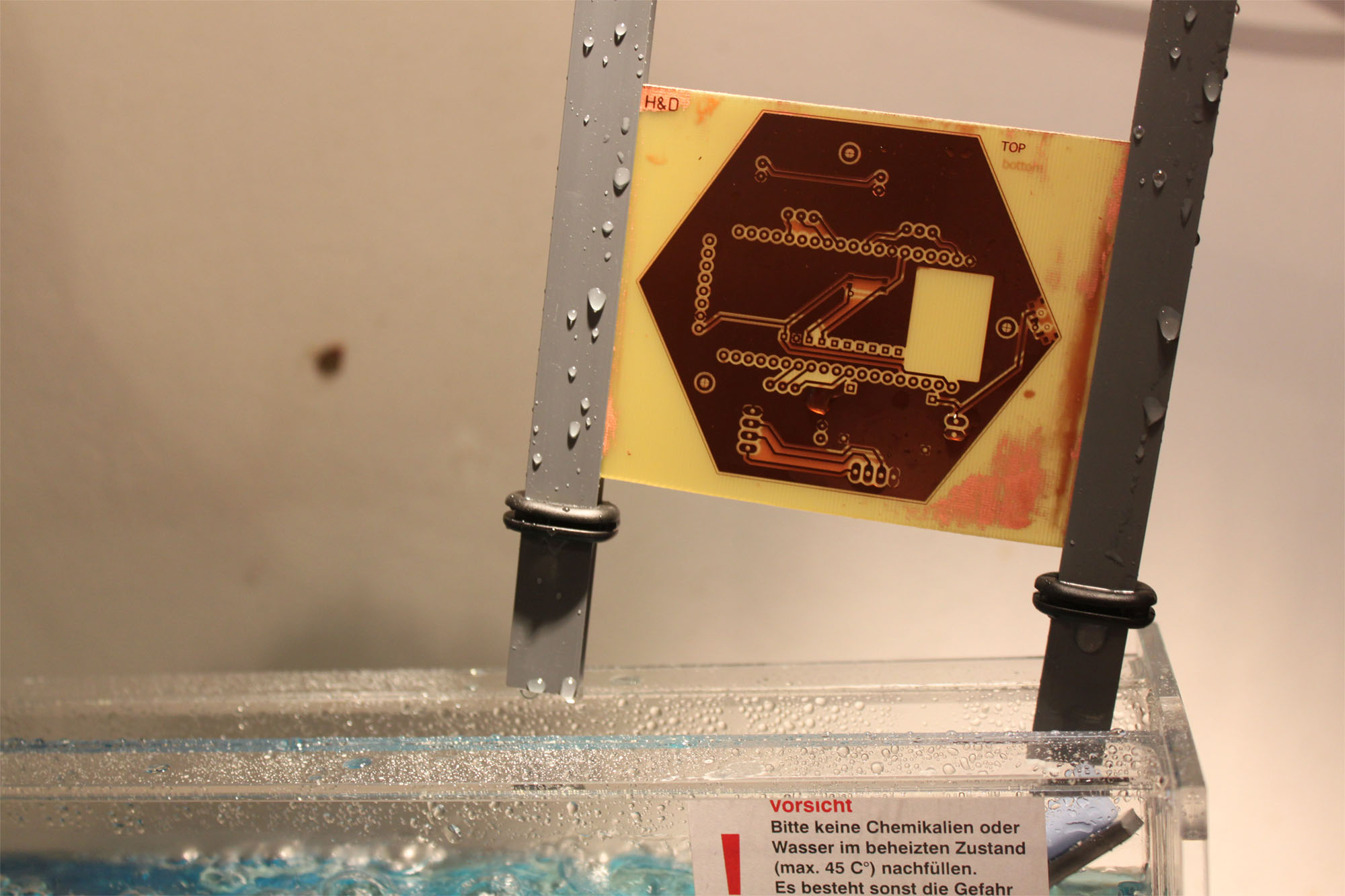

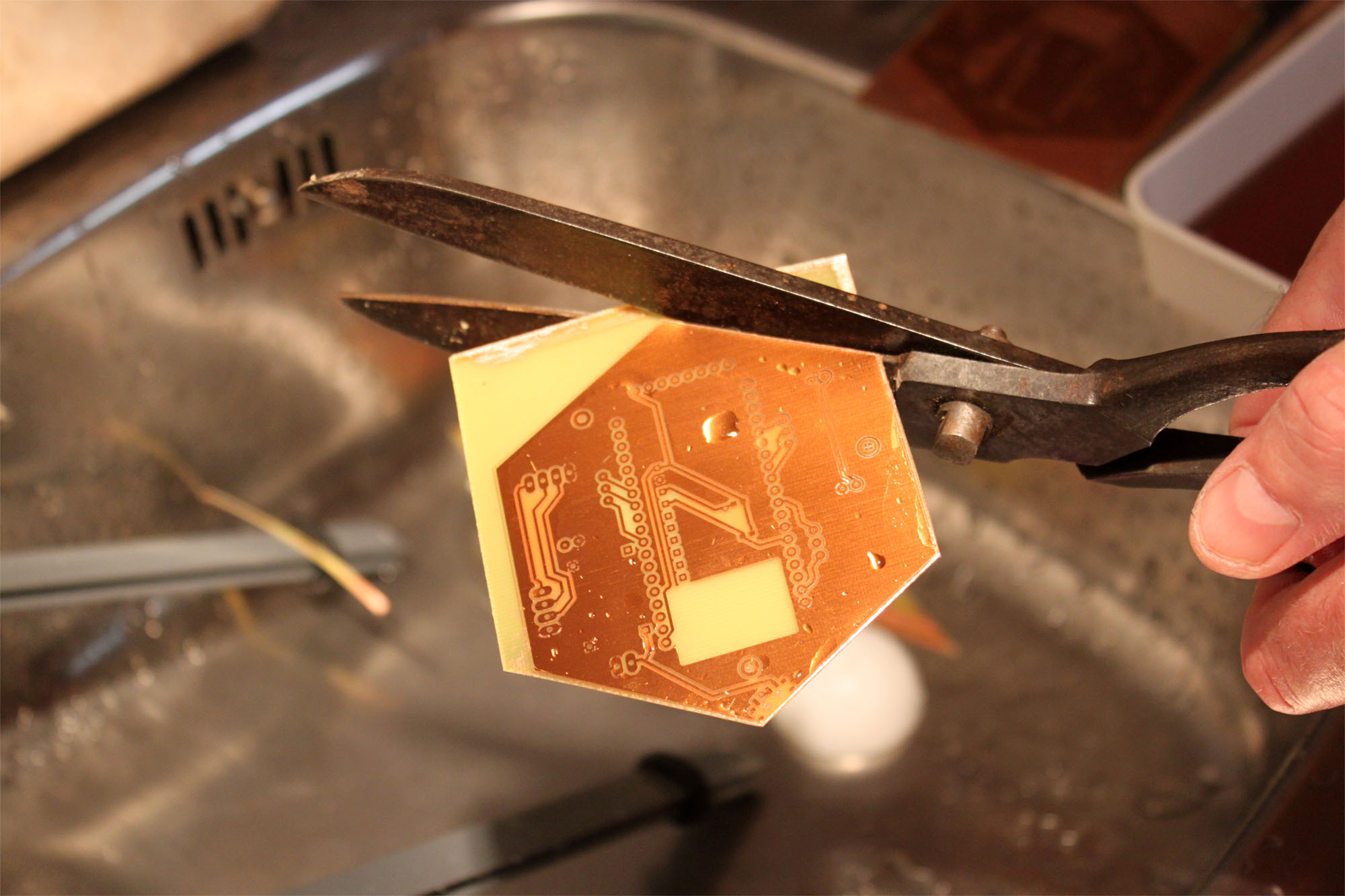

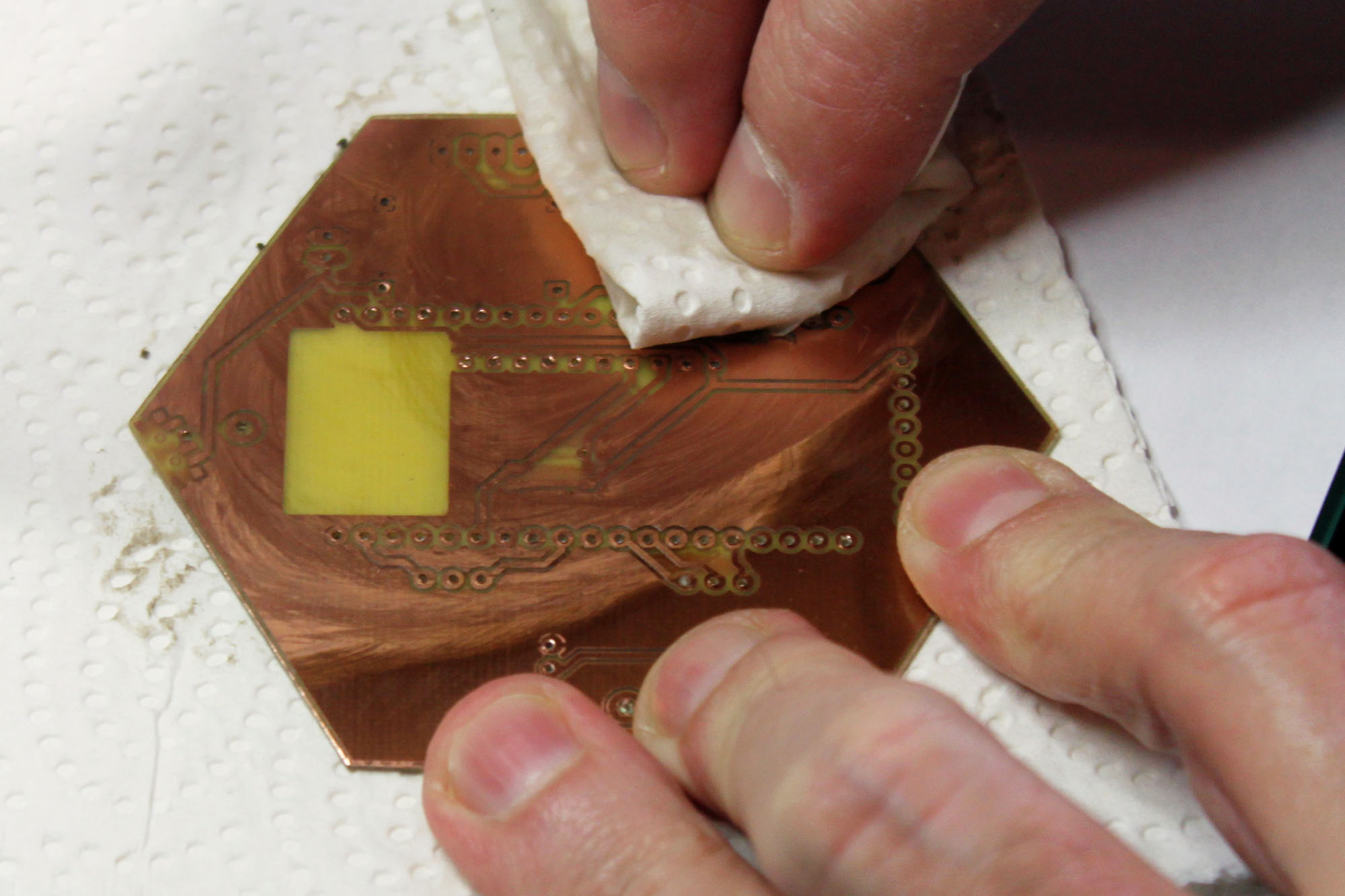

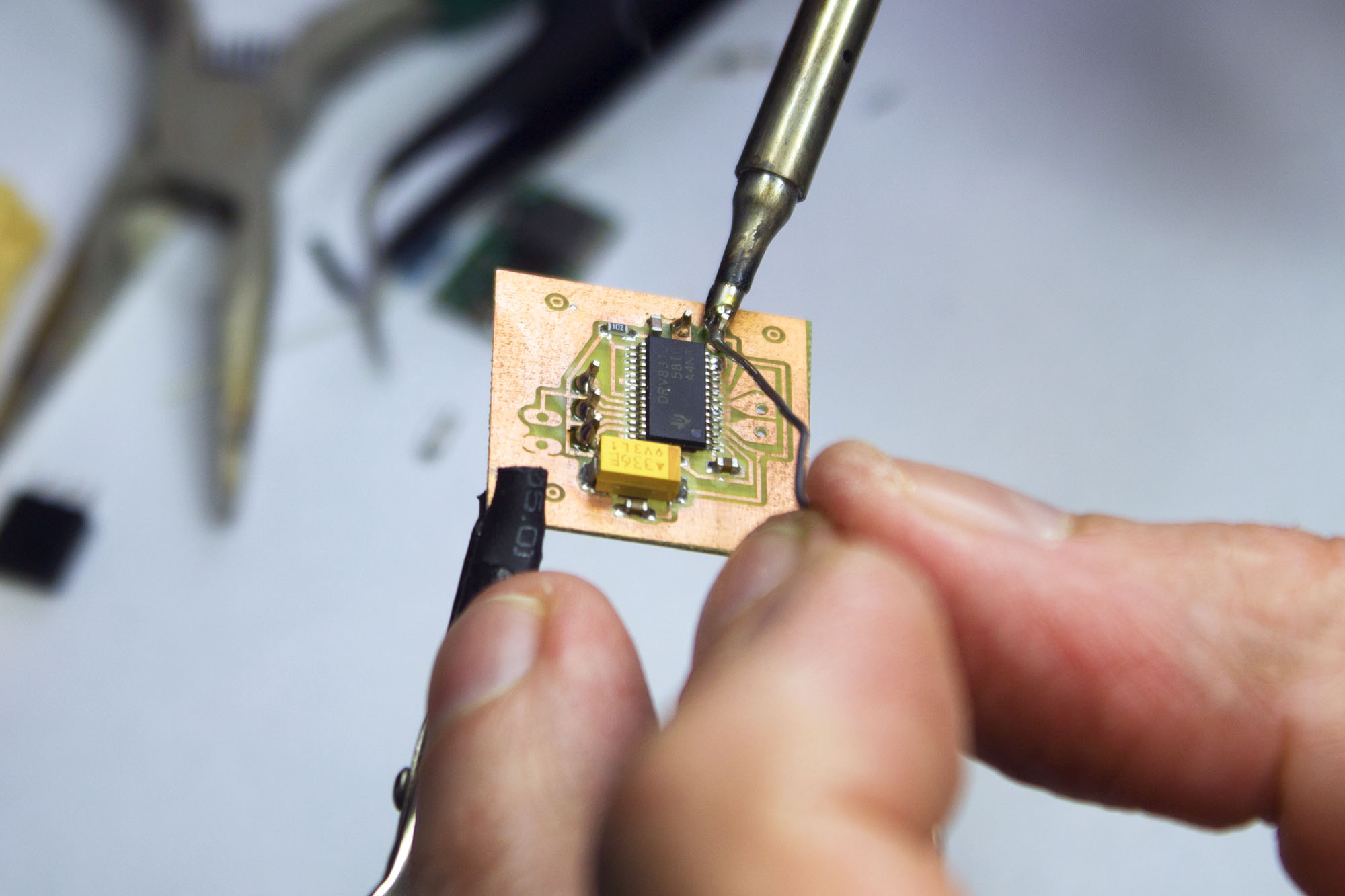

After exposure, the board is developed, which dissolves the exposed photoresist, and then put into the etching tank until all the exposed copper is etched away while the shiny copper of the designed traces remains. When you can see light shining through the bare areas from behind, you know that the board is etched enough. We chose a fairly thin board, which we cut to shape with a pair of scissors after etching. Finally, the remaining photoresist on the traces needs to be wiped off with acetone so that solder joints form properly. Then the holes that connect both sides of the board are drilled, and the PCB is ready for the electronic components.
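The "light shining through" test can also be expressed as a simple check. This is a hypothetical sketch, not something we used: it assumes a grayscale photo of the backlit board that has already been aligned and scaled to the same grid as the binarized artwork (1 = copper to keep), and the brightness and coverage thresholds are illustrative guesses.

```python
# Hypothetical sketch: automate the backlight check for etching progress.
# Assumptions: `mask` is the binarized artwork (1 = copper to keep, 0 = bare),
# `photo` is a grayscale (0-255) image of the backlit board on the same grid,
# and the thresholds are illustrative, not measured values.

Grid = list[list[int]]

def etched_fraction(mask: Grid, photo: Grid, bright: int = 200) -> float:
    """Fraction of should-be-bare pixels through which light already shines."""
    bare = lit = 0
    for mask_row, photo_row in zip(mask, photo):
        for m, px in zip(mask_row, photo_row):
            if m == 0:  # copper here should be etched away
                bare += 1
                if px >= bright:
                    lit += 1
    return lit / bare if bare else 1.0

def etch_complete(mask: Grid, photo: Grid, required: float = 0.99) -> bool:
    """True once (almost) every bare area lets the backlight through."""
    return etched_fraction(mask, photo) >= required

artwork_mask = [[1, 0, 0], [1, 0, 0]]
backlit_photo = [[10, 250, 240], [15, 230, 90]]  # one bare spot is still dark
print(etched_fraction(artwork_mask, backlit_photo))  # 3 of 4 bare pixels are lit: 0.75
print(etch_complete(artwork_mask, backlit_photo))    # False: keep etching
```

Looking only at the should-be-bare pixels keeps the traces themselves (which stay dark) from skewing the result.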

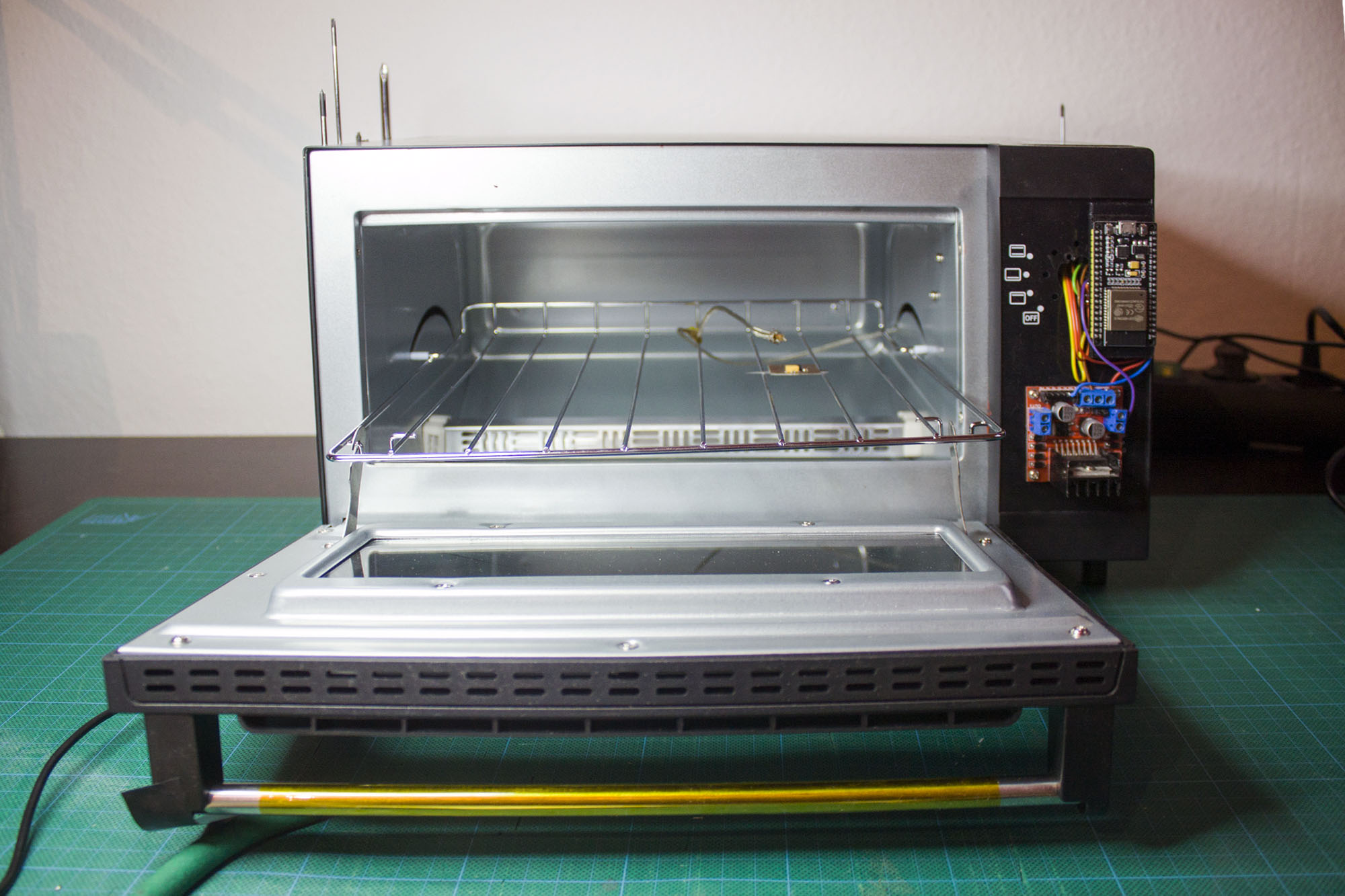

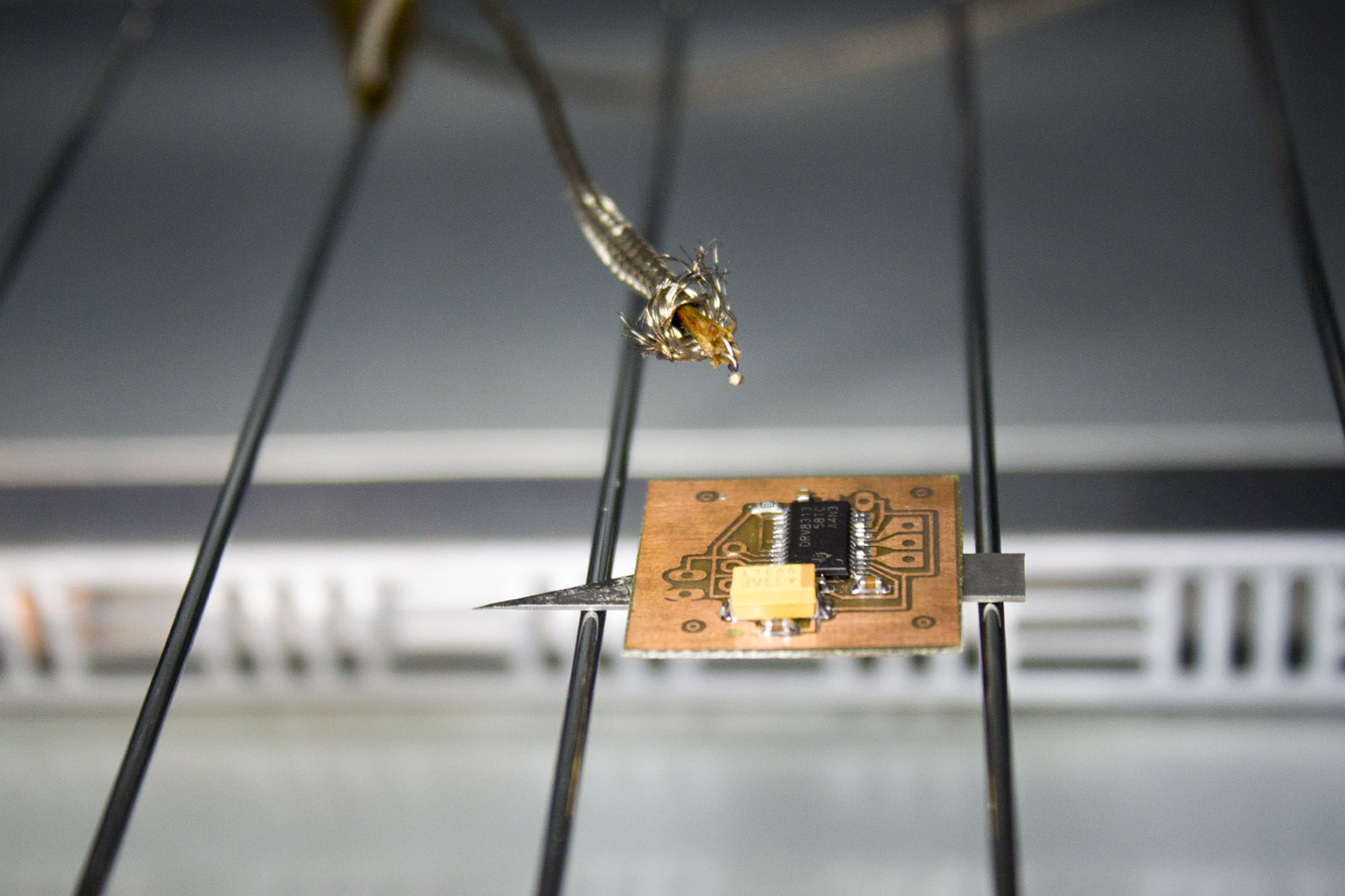

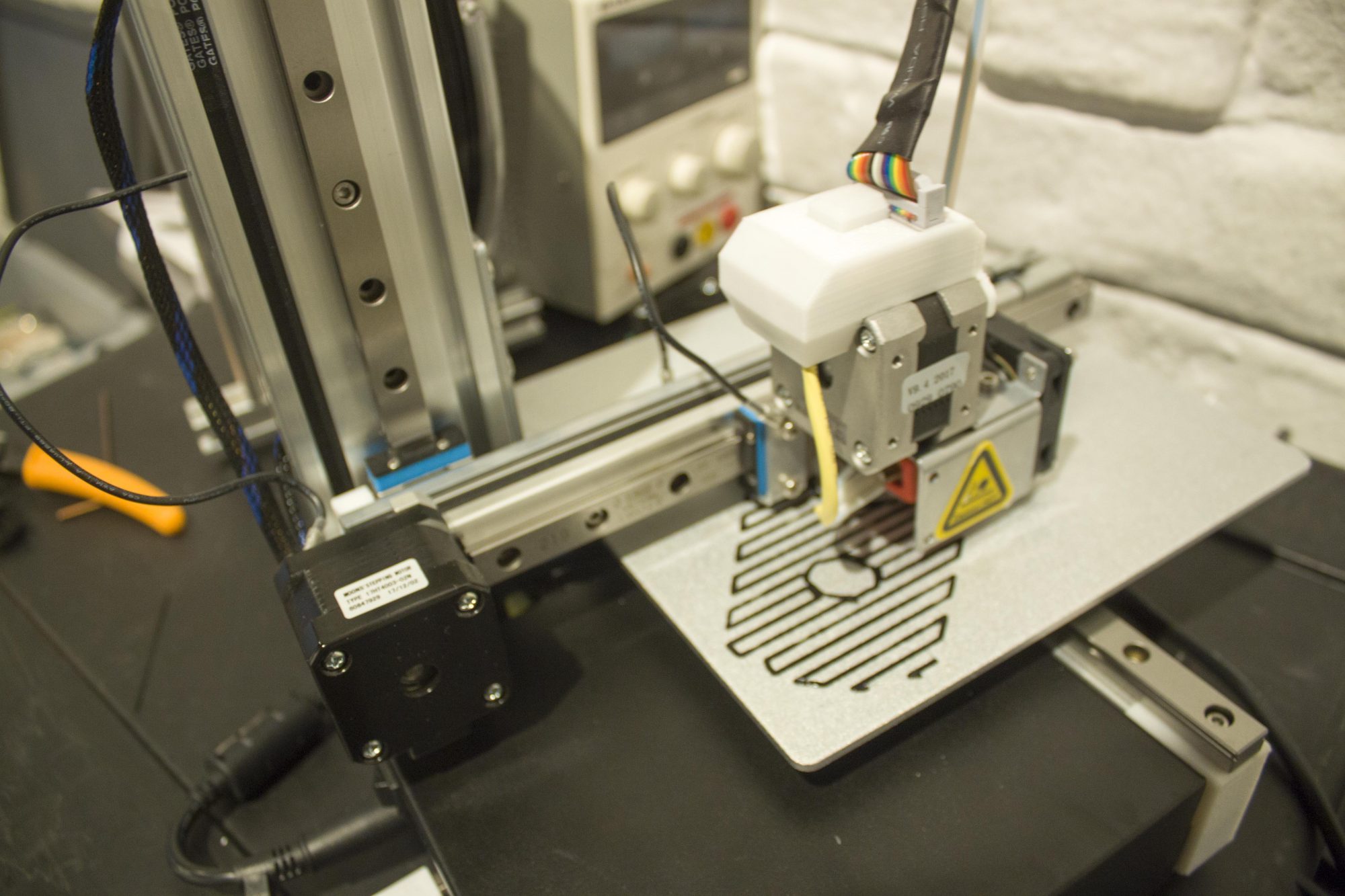

To keep the robot as small and light as possible, we decided to use SMD (Surface Mount Device) parts. These are so tiny that they are difficult to solder onto the board by hand. That is why we built a temperature-controlled SMD reflow oven, which melts the solder and attaches the parts to the board for you instead. 🙂
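A reflow oven has to follow a temperature profile over time rather than hold a single temperature. Below is a minimal Python sketch of that idea. We have not described our oven's electronics or firmware here, so everything in it is an assumption: the profile points are placeholders loosely shaped like a typical leaded solder paste profile (real values belong in your paste's datasheet), the heater is assumed to be driven with a duty cycle between 0 and 1, and PID control is just one common choice, not necessarily what our oven uses. The thermal model in the demo loop uses made-up constants.

```python
# Sketch of a temperature-controlled reflow cycle (not our actual firmware).
# Assumptions: placeholder profile points; the heater takes a duty cycle in [0, 1].

PROFILE = [  # (time in s, target temperature in °C), placeholder values
    (0, 25),
    (90, 150),   # preheat ramp
    (180, 180),  # soak
    (220, 225),  # ramp to peak: solder melts
    (240, 225),  # short hold above the melting point
    (300, 100),  # cool down
]

def setpoint(t: float, profile=PROFILE) -> float:
    """Linearly interpolate the target temperature at time t."""
    if t <= profile[0][0]:
        return float(profile[0][1])
    for (t0, y0), (t1, y1) in zip(profile, profile[1:]):
        if t <= t1:
            return y0 + (y1 - y0) * (t - t0) / (t1 - t0)
    return float(profile[-1][1])

class PID:
    """Textbook PID controller with its output clamped to a heater duty cycle.
    No anti-windup here; a real controller would probably need it."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, target: float, measured: float, dt: float) -> float:
        error = target - measured
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return min(1.0, max(0.0, out))

if __name__ == "__main__":
    # Toy oven model with made-up constants: heating power minus losses to ambient.
    pid = PID(kp=0.05, ki=0.001, kd=0.0)
    temp, dt = 25.0, 1.0
    for step in range(300):
        t = step * dt
        duty = pid.update(setpoint(t), temp, dt)
        temp += (3.0 * duty - 0.01 * (temp - 25.0)) * dt
        if step % 60 == 0:
            print(f"t={t:5.0f} s  target={setpoint(t):6.1f} C  oven={temp:6.1f} C")
```

Interpolating the setpoint, instead of jumping between fixed temperatures, keeps the ramp rates bounded, which matters because solder pastes and components are usually specified with maximum heating rates.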